PROJECT STATUS: Funded project completed – non-funded longitudinal study underway (Updated: November 2023).

Communication is the essence of life. We communicate in many ways, but it is our ability to speak that enables us to chat in everyday situations. An estimated quarter of a million people in the UK alone are unable to speak and are at risk of isolation. They depend on Voice Output Communication Aids (VOCAs, also known as Speech Generating Devices, SGDs) to compensate for their disability. However, current state-of-the-art VOCAs can only produce computerised speech at an insufficient rate of 8 to 10 words per minute (wpm). For some users who are unable to use a keyboard, rates are even slower. For example, Professor Stephen Hawking recently doubled his spoken communication rate to 2 wpm by incorporating a more efficient word prediction system and common shortcuts into his VOCA software [New Scientist, Intel Press Release].

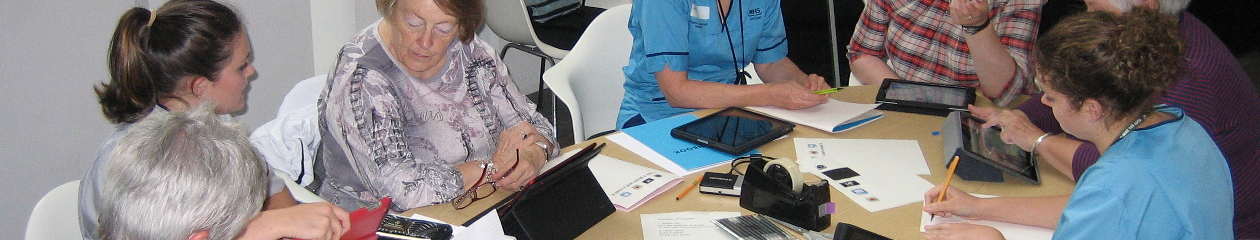

ACE-LP brings together research expertise in Augmentative and Alternative Communication (AAC) (University of Dundee), Intelligent Interactive Systems (University of Cambridge), and Computer Vision and Image Processing (University of Dundee) to develop a predictive AAC system that will address these prohibitively slow communication rates by using multimodal sensor data to inform state-of-the-art language prediction. For the first time, a VOCA system will not only predict words and phrases: we aim to provide access to extended conversation by predicting narrative text elements tailored to the ongoing conversation.

This project will develop technology that leverages contextual data (e.g. information about location, conversational partners and past conversations) to support language prediction. We will develop an onscreen user interface that adapts to the conversational topic, the conversational partner, the conversational setting and the physical ability of the nonspeaking person. Our aim is to improve the communication experience of nonspeaking people by enabling them to tell their stories easily and at more acceptable speeds.

Funder

EPSRC, RCUK

Call: User Interaction with ICT

EPSRC Reference: EP/N014278/1

Partners

Arria NLG Ltd.

Aberdeen

Capability Scotland

Edinburgh

Communication Matters

Leeds

Edesix Ltd

Edinburgh

National Museums Scotland

Edinburgh

Ninewells Hospital & Medical School

Dundee

Scope

London

Smartbox

Malvern

Tobii Dynavox

Sheffield

People

Professor Annalu Waller

Principal Investigator

(Dundee)

Contact: a.waller@dundee.ac.uk

Phone: 01382 388223

Professor Stephen McKenna

Co-Investigator

(Dundee)

Dr Jianguo Zhang

Co-Investigator

(Dundee)

Dr Per Ola Kristensson

Co-Investigator

(Cambridge)

Mr Rolf Black

Researcher Co-Investigator

(Dundee)

Dr Sophia Bano

Computer Vision and Machine Learning

(Dundee)

Dr Zulqarnain Rashid

Interaction Designer/Software Programmer

(Dundee)

Dr Shuai Li

Research Associate

(Cambridge)

Mr Conor McKillop

Research Assistant

(Dundee)

Mr Hasith Nandadasa

Research Assistant

(Dundee)

Dr Chris Norrie

PhD Student/Web Admin

(Dundee)

Dr Tamás Süveges

PhD Student

(Dundee)

Ms Martina Peeva

MSc Student

(Dundee)

Ms Sarah Wadsworth

MSc Student

(Dundee)

Selected Academic Output

Suveges T, S McKenna (2021). Cam-softmax for discriminative deep feature learning. International Conference on Pattern Recognition (ICPR), January 2021, Milan, Italy (online)

Suveges T, S McKenna (2021). Egomap: Hierarchical First-person Semantic Mapping. 2nd Workshop on Applications of Egocentric Vision (EgoApp). In conjunction with ICPR 2020, Milan, Italy (online)

Kristensson PO, J Lilley, R Black, A Waller (2020). A Design Engineering Approach for Quantitatively Exploring Context-Aware Sentence Retrieval for Nonspeaking Individuals with Motor Disabilities. CHI 2020: Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems. New York: Association for Computing Machinery, Paper 398, 11 p.

Bano S, J Zhang, S McKenna (2017). Finding Time Together: Detection and Classification of Focused Interaction in Egocentric Video. IEEE International Conference on Computer Vision Workshops (ICCVW). IEEE, pp. 2322–2330. (Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops, ICCVW 2017; vol. 2018-January).

Waller A, R Black, Z Rashid (2017). Involving people with severe speech and physical impairments in the early design of a context-aware AAC system. Communication Matters: CM2017 National AAC Conference, Leeds, United Kingdom, 10 Sep 2017, p. 34.

Bano S, T Suveges, J Zhang, S McKenna (2018). Multimodal Egocentric Analysis of Focused Interactions. IEEE Access, vol. 6, pp. 37493–37505, 25 Jun 2018.

Black R, S McKenna, J Zhang, PO Kristensson, C Norrie, S Bano, Z Rashid, A Waller (2016). ACE-LP: Augmenting Communication using Environmental Data to drive Language Prediction. Communication Matters Conference, Leeds, UK. (Poster, Book of Abstracts at CM 2016)

Black R, S McKenna, J Zhang, PO Kristensson, C Norrie, S Bano, Z Rashid, A Waller (2016). ACE-LP: Augmenting Communication using Environmental Data to drive Language Prediction. 17th Biennial Conference of the International Society of Augmentative and Alternative Communication (ISAAC), Toronto, Canada. (Poster; Abstract at ISAAC, anonymised)

McGregor A (2016). No Further On After 30 Years, Still Can’t Speak Fast Enough. 17th Biennial Conference of the International Society of Augmentative and Alternative Communication (ISAAC), Toronto, Canada. (PDF of PowerPoint presentation)

Other Public Output

Science Saturday at the National Museum of Scotland in Edinburgh, 10 February 2018: with support from the EPSRC Telling Tales of Engagement grant, we held a day of interactive information on AAC and assistive technology (AT) at the museum.

2019: ACE-LP was featured on the BBC Click Christmas Live programme (about 14 minutes in).

YouTube: An introduction to ACE-LP (2016). Annalu Waller, Rolf Black, with Alan McGregor, University of Dundee, Press Office.

Report on ISAAC (2016). Alan McGregor, Honorary Researcher at Dundee University.

Women in Computer Vision: Sophia Bano (2016). Computer Vision News, RSIP Vision.

Press Cuttings

STV Six O’Clock News (5 Sep 2016). Susan Nicholson, STV.tv, player.stv.tv/summary/stv-news-dundee.

Speech aid advances could help end social isolation (3 Sep 2016). Kirsteen Paterson, TheNational.scot, Herald & Times Group.

£1 million Dundee University project to improve communication devices (2 Sep 2016). Ciaran Sneddon, TheCourier.co.uk, DC Thomson Publishing.

Social Media

Twitter: @DundeeAAC #DACELP

Facebook: facebook.com/DundeeAAC

Further Reading and Related Publications

Communication Access to Conversational Narrative (2006). Waller A. Topics in Language Disorders, Vol. 26, No. 3, pp. 221–239, Lippincott Williams & Wilkins, Inc. (PDF at Edinburgh University)

The WriteTalk Project: Story-based Interactive Communication (1999). Waller A, Francis J, Tait L, Booth L and Wood H. In Assistive Technology on the Threshold of the New Millennium. Christian Bühler and Harry Knops (Eds.). IOS Press. (PDF)

Locked in My Body (2016). Terry Newbury is fully awake but not able to communicate with the outside world. This short observational documentary tells Terry’s story and his fight to communicate again. BBC iPlayer.

My Left Foot (1954). Christy Brown (Book)

The Diving-Bell and the Butterfly (1997). Jean-Dominique Bauby (Book)