A £1 million research project that aims to change dramatically the way people with no speech and complex disabilities can have a conversation with others has been launched by the Universities of Dundee and Cambridge.

Computer-based systems – called Voice Output Communication Aids (VOCAs) – use word prediction to speed up typing, a feature similar to that commonly found on mobile phones or tablets for texting and emailing.

However, for those with complex disabilities, including, for example, Professor Stephen Hawking, typing to communicate can still be extremely slow, at as little as 2 words per minute, which makes face-to-face conversation very difficult. Even at an average computer-aided communication rate of about 15 words per minute, conversation falls far short of the 150 words per minute speaking rate of people without a communication impairment.

It is estimated that more than a quarter of a million people in the UK alone are at risk of isolation because they are unable to speak and are in need of some form of augmentative or alternative communication (AAC) to support them with a severe communication difficulty.

“Despite four decades of VOCA development, users seldom go beyond basic needs-based utterances as communication rates remain, at best, ten times slower than natural speech, making conversation almost impossible. It is immensely frustrating for both the user and the listener. We want to improve that situation considerably by developing new systems which go far beyond word prediction,” says Rolf Black, one of the project investigators at the University of Dundee.

Professor Annalu Waller from the University of Dundee, who is lead investigator for this research project, adds: “What we want to produce, for the first time, is a VOCA system which will not only predict words and phrases but will provide access to extended conversation by predicting narrative text elements tailored to an ongoing conversation.”

“In current systems users sometimes pre-store monologue ‘talks’, but sharing personal experiences and stories interactively using VOCAs is rare. Being able to relate experience enables us to engage with others and allows us to participate in society. In fact, the bulk of our interaction with others is through the medium of conversational narrative, i.e. sharing personal stories.”

Professor Stephen McKenna, also of the University of Dundee, explains: “We plan to harness recent progress in machine learning and computer vision to build a VOCA that gives its non-speaking user quick access to speech tailored to the current conversation. In order to predict what a person might want to say, this VOCA will learn from information it gathers automatically about conversational partners, previous conversations and events, and the locations in which these take place.”

Dr Per Ola Kristensson at the University of Cambridge’s Department of Engineering brings his extensive expertise in probabilistic text entry to the project. As one of the inventors of the highly successful gesture keyboard text input system for mobile phones, commercialised under many names such as ShapeWriter, Swype and gesture typing, he adds: “What I find truly exciting about this project is the way it will advance state-of-the-art techniques from statistical language processing to potentially drastically improve text entry rates for rate-limited users with motor disabilities.”

“This does not mean that the computer will speak for a person,” adds Black. “It will be more like a companion who, being familiar with aspects of your life and experiences, has some idea of what you might choose to say in a certain situation.”

The project is named “Augmenting Communication using Environmental Data to drive Language Prediction – ACE-LP” and brings together research expertise in Augmentative and Alternative Communication (AAC) and Computer Vision & Image Processing at the University of Dundee with Intelligent Interactive Systems at the University of Cambridge.

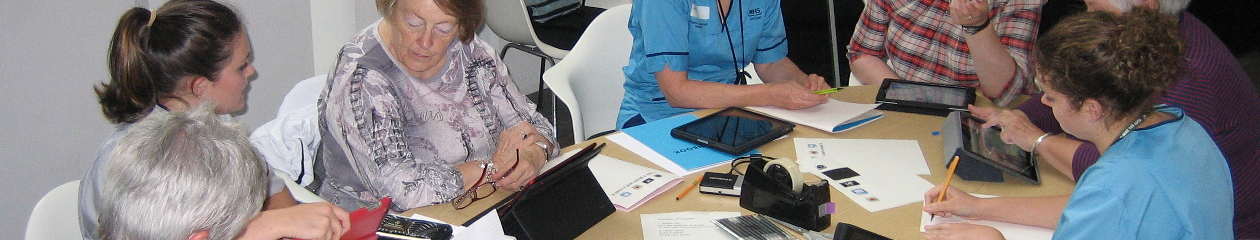

ACE-LP has a number of partners including Capability Scotland and Scope, the two leading charities for people with complex disabilities in the UK, and the ENT at Ninewells Hospital, NHS Tayside.

Industry partners include two of the world’s leading developers of VOCAs, Smartbox Assistive Technology and TobiiDynavox, as well as Arria NLG Ltd, the market leader in real-time data storytelling, and Edesix Ltd, a leading provider of advanced Body Worn Camera Solutions, based in Edinburgh.

The Universities will also work with National Museums Scotland and the leading UK charity Communication Matters to ensure that the results of the research are communicated beyond the science communities into clinical work and mainstream knowledge.

This research project is funded by the UK Engineering and Physical Sciences Research Council (EPSRC).

Project web site: http://ACE-LP.ac.uk